White Paper On Best Practices for Software Development in Deep Learning

We congratulate our Kepler team member Per John for having his article on “Best Practices for Software Development in a Deep Learning Environment” published on Towards Data Science. Towards Data Science provides a platform for thousands of people to exchange ideas and to expand the understanding of data science.

For convenience, a copy of the publication is provided below:

Software development: best practices in a deep learning environment

Deep learning systems are now being used extensively in many environments. They differ from traditional software systems in the manner through which output is generated: decisions which are made to produce results are learned from training data, rather than being hand-coded, as is the case with traditional software systems.

This has led people to wrongly believe that disciplined software development is not as necessary for machine learning systems, since the algorithms at the heart of any substantial system are learned by the system itself. Furthermore, state-of-the-art machine learning (ML) is advancing so rapidly that data scientists focus primarily on short-term goals like model precision. Given that the deep learning world will have changed within a matter of months, why waste time properly engineering your system today? Currently, the lie of the land in deep learning engineering is that a system is cobbled together through experimentation until some precision goal is achieved, and is then put into production.

Even if it differs from traditional systems, this method of engineering an ML system is pernicious for several reasons:

- A mistake can easily be made, producing an incorrect precision figure, for instance because of an error in data loading or transformation, or incorrect handling of the training/validation/test sets.

- Reproducing training results is time-consuming, since the data and configuration used are often undocumented and unversioned.

- Running the ML model in a production environment is difficult since the system is only tested in a poorly documented developer environment.

- Adapting the ML system to changing requirements is also tricky since maintainability of the code base was not a concern, and the system lacks automated tests.

Given these challenges, how do you engineer deep learning systems whilst avoiding the detrimental effects of throw-away coding and experimentation? In this article, we share the best practices developed and adopted at Kepler Vision Technologies. These practices are rooted firmly in modern-day software development practices and account for the peculiarities of developing machine learning systems. So, what is special to developing deep learning systems, compared with traditional systems?

The peculiarities of deep learning engineering

In this section we list the features of deep learning engineering that present major obstacles to the adoption of best practices from traditional software engineering. In the next section, we present the measures we took to make our deep learning development process more robust and efficient, overcoming (part of) the obstacles listed here.

1. Rapidly evolving deep learning frameworks

At the dawn of the deep learning revolution, models were built on low-level libraries. Some time later, specialized libraries began appearing to aid ML engineers, most notably the Caffe library, launched in 2013. Three years later, a plethora of other deep learning libraries had come into existence. Scrolling forward to today, the number of libraries continues to increase, though a group of front-runners has formed. Besides the birth of new libraries, each existing library evolves rapidly as it tries to keep up with new developments in neural network research.

2. Data management

A major characteristic of deep learning systems is that the amount of input data is much larger, as is the size of an individual data item, compared with traditional software systems. This is particularly true during the training phase, in which the amount of data used is often very large. Whilst the data stores managed by traditional software systems can also become colossal, these systems can still be run and tested using just a fraction of the data. Training a deep learning system, on the other hand, rarely succeeds unless large amounts of data are used.

3. Configuration management

Managing configuration data is an intricate art in software engineering, and best practice is to version configuration data in such a way that it is clear which configuration data belongs to which version of the source code. This best practice also holds for machine learning systems, but remains hard to implement, as the configuration data is so large in comparison with traditional systems. After all, the weights of a trained neural network are configuration data and can easily add up to 1 GB.

The so-called hyper-parameters used when training a deep learning model are also configuration data and must be managed in kind. In practice, these hyper-parameters are often scattered around the code and tuned ad hoc until the precision goal is met.

4. Testing deep learning systems

Test automation is now widely used in software development. In the deep learning world, however, test automation is not used to nearly the same extent. The reasons for this are twofold: first, deep learning developers are often not trained in modern software engineering practices, and second, developing a deep learning model requires a great deal of experimentation.

Additionally, training a deep learning system is a non-deterministic process. This makes automated testing of the training process more difficult, since simple asserts on training outcomes will not be enough. Take into account the long training times, and it is easy to see why most deep learning engineers forgo automated testing.

Automated testing of inferencing is more straightforward. Once a model is trained, only small amounts of non-determinism remain, due to rounding errors.
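Because of that residual rounding noise, an inference test usually compares outputs within a tolerance rather than for exact equality. The following is a minimal sketch of the idea, not our actual test code: the helper `outputs_match`, the tolerance values and the reference scores are all hypothetical, and the "predicted" list stands in for real model output.

```python
import math


def outputs_match(actual, expected, rel_tol=1e-4, abs_tol=1e-6):
    """Return True if two sequences of scores agree within a tolerance."""
    if len(actual) != len(expected):
        return False
    return all(
        math.isclose(a, e, rel_tol=rel_tol, abs_tol=abs_tol)
        for a, e in zip(actual, expected)
    )


def test_inference_matches_reference():
    # Hypothetical reference scores recorded from a trusted run.
    reference = [0.1234567, 0.8765433]
    # Stand-in for model output: differs only in the last digit,
    # as rounding noise would.
    predicted = [0.1234568, 0.8765432]
    assert outputs_match(predicted, reference)
```

The tolerances are a judgment call: too tight and the test becomes flaky across hardware, too loose and it stops catching real regressions.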

5. Deployment of deep learning systems

Two central features distinguish deployment of deep learning systems from traditional systems. Firstly, most deep learning systems need special hardware, a GPU, to run with enough throughput. Secondly, deep learning systems require a large configuration file, the weight file, to be able to make predictions. This makes achieving dev/prod parity, that is, keeping the development and production environments as similar as possible, more difficult. Moreover, it is unwise to package the weight file together with the source code in one deployment package because of its size. As a result, you need to deploy the weight files and the system as separate artifacts, whilst keeping them in sync.

Our approach to engineering deep learning systems

Our approach is based on the guiding principle that training and running a deep learning system should be automated to the greatest possible extent. It should not be based on an individual person needing to train and evaluate the model after weeks of experimentation.

Using continuous integration to build Docker images

We use Docker to specify the development and run-time environment of our deep learning systems. When we push changes to our version control system — in our case GitHub — Travis kicks in and runs tests, and if successful, will then build the latest Docker images and push them to the Docker repository. The Docker images will not contain the model, as that would make our Docker images too big to easily distribute.

Using Docker, the run-time environment is captured together with the code, and it is possible to deploy and run the system reliably in any environment supporting Docker, regardless of the deep learning framework used.

Putting cloud first

We strive for our models to be trained and evaluated on an instance in the cloud (we use Amazon AWS), since this disentangles the platform details from running our system. The Docker image approach described above combines neatly with Amazon AWS to achieve this abstraction.

Storing data in a central location

Data management is an integral part of deep learning systems. We store data and annotations in a central repository (AWS S3 buckets), and use scripts to sync data locally, such that our applications, jobs and developers have access to the latest data. Jobs always run using clean copies of data, and we deem the time taken in downloading the data well spent; this gives us certainty that the right train and test data is being used.

Storing weight files in a central location

We store weight files in a central location and pull them in if needed to start an inferencing server or an evaluation job. The weight files are updated when a training job has produced an improved version of the model. Storing weight files centrally ensures that jobs and developers have easy access to the latest model.
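One way to make "pull them in if needed" safe is to verify a checksum, so that a job never runs with a stale or truncated weight file. The sketch below illustrates this under stated assumptions: `fetch_weights` and `sha256_of` are hypothetical helpers, the central location is modelled as a plain file path, and `shutil.copyfile` stands in for whatever download mechanism is actually used.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def fetch_weights(central_path, cache_dir, expected_sha256):
    """Copy a weight file into a local cache unless a verified copy exists."""
    central_path = Path(central_path)
    cache_dir = Path(cache_dir)
    cache_dir.mkdir(parents=True, exist_ok=True)
    local_path = cache_dir / central_path.name
    # Fetch only when the cached copy is missing or does not match.
    if not local_path.exists() or sha256_of(local_path) != expected_sha256:
        shutil.copyfile(central_path, local_path)  # stand-in for a download
    # Fail loudly rather than serve predictions from the wrong weights.
    if sha256_of(local_path) != expected_sha256:
        raise ValueError(f"checksum mismatch for {local_path}")
    return local_path
```

Pinning the expected digest next to the source code is one possible way to keep code and weights in sync even though they are deployed as separate artifacts.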

Storing hyper-parameter configuration in a central location

We store (changes to) the hyper-parameters used for training and evaluation in a configuration file. This prevents these parameters from becoming scattered around the source code. We use a central location to store these configuration files so that they are accessible to jobs and developers.
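As an illustration of keeping hyper-parameters out of the source code, the sketch below loads them from a JSON file into a single typed object. The file format, the `TrainingConfig` class and its three fields are assumptions made for this example, not a description of our actual configuration files.

```python
import json
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class TrainingConfig:
    # Hypothetical hyper-parameters; a real model will have its own set.
    learning_rate: float
    batch_size: int
    epochs: int


def load_config(path):
    """Load hyper-parameters from a JSON file, rejecting unexpected keys."""
    with open(path, encoding="utf-8") as handle:
        raw = json.load(handle)
    expected = {field.name for field in fields(TrainingConfig)}
    unknown = set(raw) - expected
    missing = expected - set(raw)
    if unknown or missing:
        raise ValueError(
            f"unknown keys: {sorted(unknown)}, missing keys: {sorted(missing)}"
        )
    return TrainingConfig(**raw)
```

Rejecting unknown and missing keys catches typos in a configuration file before an expensive training run starts, and the frozen dataclass prevents the values from being changed silently halfway through a job.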

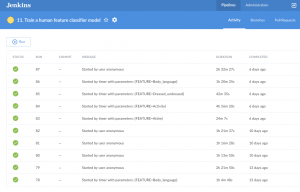

Consolidating training and evaluation into jobs

We consolidate training and evaluation into jobs using the Jenkins automation server. The jobs pull in the latest Docker images, as well as the data and annotations, and run training or evaluation inside a Docker container. The Docker container has volumes attached which point to the data, the annotations and, in the case of evaluation, the model file.

After a training job has completed, it produces a weights file, which is stored provided it meets the KPIs of the model.
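Such a gate can be expressed as a small check that a job runs before storing the weights. This is a sketch only: `meets_kpis` is a hypothetical helper, and the metric names and thresholds in the usage comment are made up for illustration.

```python
def meets_kpis(metrics, thresholds):
    """Return True if every metric named in thresholds reaches its minimum.

    A metric that is missing from `metrics` counts as a failure.
    """
    return all(
        name in metrics and metrics[name] >= minimum
        for name, minimum in thresholds.items()
    )


# Example usage with made-up numbers:
#   meets_kpis({"precision": 0.93, "recall": 0.88},
#              {"precision": 0.90, "recall": 0.85})
# evaluates to True, so the job would go on to store the weights file.
```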

The job will consolidate all the knowledge and data needed into scripts, and anyone will be able to reliably train or evaluate a model, without needing to understand the intricacies of the model.

We also archive the annotation files, configuration files and produced weight files, so that the results of a job can be reproduced.
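One lightweight way to make an archived job traceable is to write a manifest that records a digest of every file the job used or produced. The sketch below assumes a JSON manifest and hypothetical helper names (`file_sha256`, `write_manifest`); it illustrates the idea rather than our actual archiving scripts.

```python
import hashlib
import json
from pathlib import Path


def file_sha256(path):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(artifacts, manifest_path):
    """Record name, size and digest of each artifact of a job.

    `artifacts` maps a label (e.g. "annotations") to a file path.
    """
    entries = {
        label: {
            "file": Path(path).name,
            "bytes": Path(path).stat().st_size,
            "sha256": file_sha256(path),
        }
        for label, path in artifacts.items()
    }
    with open(manifest_path, "w", encoding="utf-8") as handle:
        json.dump(entries, handle, indent=2, sort_keys=True)
    return entries
```

Comparing two manifests shows at a glance whether two jobs ran on the same annotations and configuration, which is usually the first question when results differ.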

Using remote development features of PyCharm

Developers are more productive when they can use an integrated development environment (IDE). Sometimes developers need to test and debug the source code and model on a GPU, which is often unavailable on their development machine. One way to combine using an IDE with running the code on a GPU is to start a Docker container on a machine with a GPU and then use PyCharm’s features for running a remote interpreter. A remote interpreter significantly reduces round-trip time compared with uploading source code to a remote server and adding logging statements for debugging.

Using test automation

As explained above, writing effective and efficient automated tests is harder for deep learning systems than for traditional software systems. We do write automated tests for deep learning systems, using the pytest framework, but not as extensively as we would do for traditional software systems.

We focus on testing data input and on important algorithms. We also write tests for training and evaluation, but only to the extent that the code will run smoothly. We do not check that a full training run produces the correct results, as this would be too time-consuming. Instead, we write an automated test that runs one or two epochs on a very small data set, then we check that output metrics are produced but do not assert their values.
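The shape of such a smoke test is sketched below in pytest style. The `train` function here is a toy stand-in (it fits a single weight by gradient descent) so that the example is self-contained; in a real project the test would call the project's own training entry point instead. All names and the tiny data set are hypothetical.

```python
import math
import random


def train(samples, epochs, learning_rate=0.1, seed=0):
    """Toy stand-in for a training loop: fit y = w * x by gradient descent."""
    rng = random.Random(seed)
    weight = rng.uniform(-1.0, 1.0)
    history = []
    for _ in range(epochs):
        total_loss = 0.0
        for x, y in samples:
            error = weight * x - y
            # Gradient step; the constant factor 2 is folded into the rate.
            weight -= learning_rate * error * x
            total_loss += error ** 2
        history.append({"loss": total_loss / len(samples)})
    return {"weight": weight, "history": history}


def test_training_smoke():
    # Very small data set: the goal is to exercise the code path,
    # not to reach any particular quality.
    tiny_dataset = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
    result = train(tiny_dataset, epochs=2)
    # Metrics are produced for every epoch...
    assert len(result["history"]) == 2
    for epoch_metrics in result["history"]:
        assert "loss" in epoch_metrics
        # ...and are well-formed numbers, but their values are not asserted.
        assert math.isfinite(epoch_metrics["loss"])
```

A test like this runs in well under a second and still catches the most common breakages: shape mismatches, renamed configuration keys and crashes in the data pipeline.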

The result of our test automation effort is that our code base is easier to understand and that changes require less effort to implement.

Measuring maintainability metrics

A software system needs to be maintained in order to adapt to changing requirements and environments, and a deep learning system is no exception. The more maintainable a system is, the easier it will be to modify and extend. We use bettercodehub.com to check 10 software engineering guidelines and to notify us when a system drifts off course. We prefer this tool over code violation tools since it allows us to focus on the important maintainability guidelines, rather than having to deal with the large number of violations that a tool like PyLint will report.

Conclusion

Deep learning is a relatively new addition to the IT toolbox of organizations. It is partly rooted in traditional software development and partly in data science. Given that deep learning remains in its infancy, it is unsurprising that standard engineering practices have not yet been established. The development of deep learning software is nevertheless an integral part of software engineering, and we must take the principles of modern software engineering as a starting point for improving deep learning software engineering.

The practices listed above have made our development process for deep learning models more robust and repeatable and have created a common ground for collaboration between deep learning practitioners and software engineers. The process is not perfect, as no process is, and we are continuously searching for ways to improve on our current working methods.

Thanks to Rob van der Leek.